Author: Juan Miguel Giraldo

Github repository

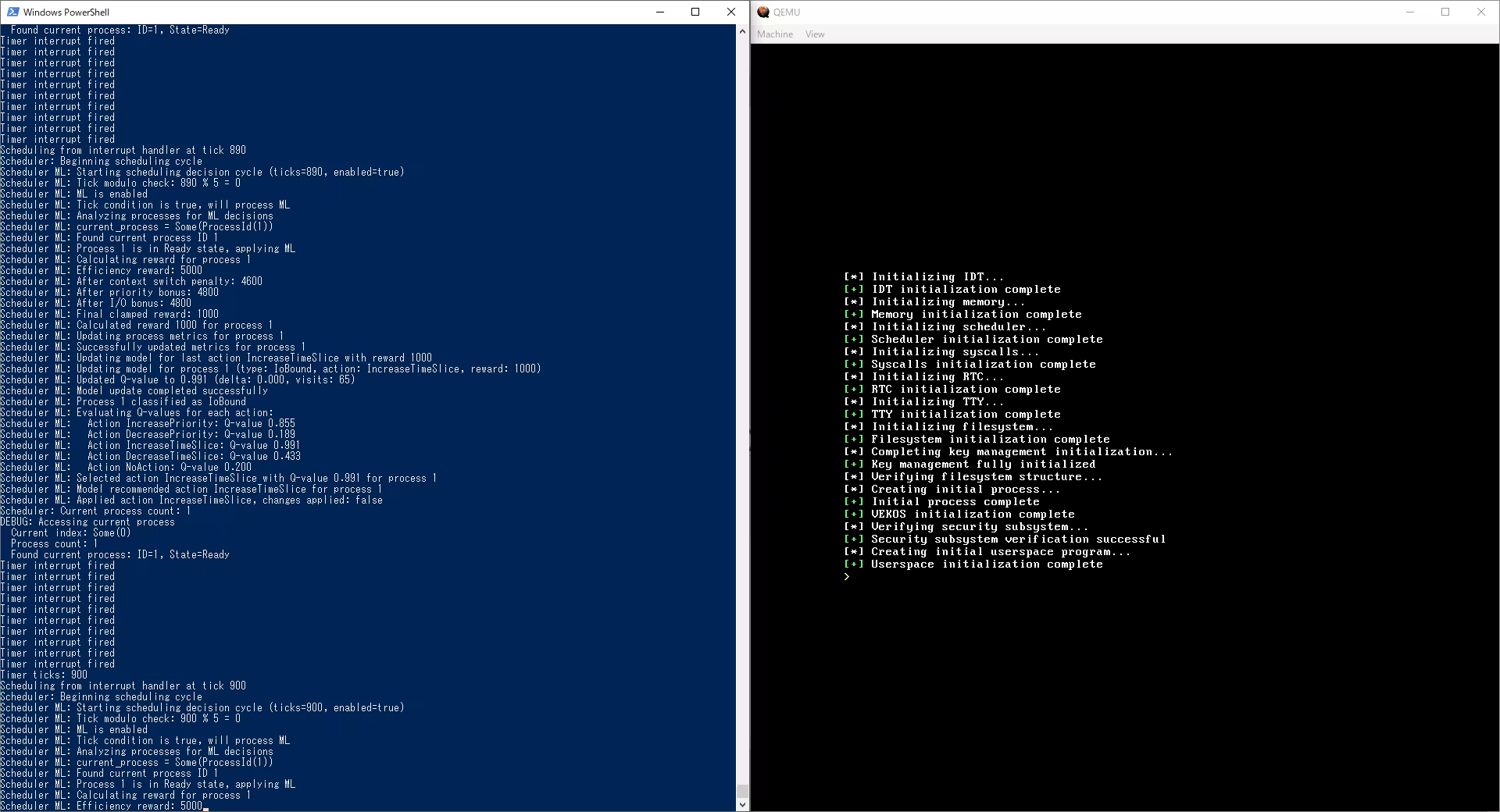

In VEKOS, our experimental operating system, we've implemented a reinforcement learning approach directly within the kernel's scheduler. This post explains why we took this path and what we've learned so far.

The Challenge: Balancing Security with Performance

VEKOS is built around a core principle: every filesystem and memory operation must be cryptographically verified. This creates a unique scheduling challenge that traditional operating systems don't face to the same degree.

Cryptographic operations tend to be:

- CPU-intensive, often using specialized instructions that can occupy execution units for extended periods

- Bursty in nature, with verification requests clustering during certain operations

- Critical for system integrity, meaning they can't simply be deferred indefinitely

- Potentially at odds with user-facing responsiveness if not managed carefully

Traditional fixed-policy schedulers struggle with these workloads because they can't adapt to the patterns of verification operations that emerge during runtime. Most OS schedulers are designed around more general workloads, without specific optimization for cryptographic operations.

For example, a typical priority-based scheduler might not recognize that certain cryptographic tasks, while appearing CPU-bound, actually have complex dependencies that make them more efficiency critical than their CPU profile would suggest. Similarly, completely fair schedulers might interrupt long-running verification operations at suboptimal points, causing significant computational waste.

Why Reinforcement Learning?

After exploring several options, we chose to experiment with Q-learning, a reinforcement learning technique that balances simplicity with adaptability. Our reasons included:

- Limited state information: Q-learning can operate effectively with the partial system state visible to the kernel scheduler.

- Resource constraints: The kernel environment has strict memory and computational limits. Q-learning's tabular approach has predictable and modest resource requirements.

- Online learning: Unlike supervised learning approaches, Q-learning improves through experience without requiring pre-training on specific workloads.

- Multi-objective balancing: Through careful reward design, Q-learning can balance competing objectives like security, throughput, and responsiveness.

- Implementation simplicity: A Q-table implementation is relatively straightforward to debug and reason about, important for kernel-level code where reliability is paramount.

Our implementation maintains a Q-table that maps process types (CPU-bound, IO-bound, Interactive, Background, Unknown) to scheduler actions (changing priority, modifying time slices), along with their expected rewards. This table evolves over time as the system observes the outcomes of its scheduling decisions.

Process Classification

A key component of our approach is classifying processes into different types. This classification helps the scheduler make more informed decisions about how to treat different workloads:

pub fn classify_process(&self, process: &Process) -> ProcessType {

let time_slice_used_percent = if process.remaining_time_slice > 90 {

0

} else if process.remaining_time_slice < 10 {

100

} else {

100 - (process.remaining_time_slice * 100 / 100)

};

if time_slice_used_percent > 90 {

ProcessType::CpuBound

} else if time_slice_used_percent < 30 {

ProcessType::IoBound

} else if process.context_switches > 100 {

ProcessType::Interactive

} else if process.priority > 7 {

ProcessType::Background

} else {

ProcessType::Unknown

}

}This classification helps us identify which processes are likely involved in cryptographic operations (often CPU-bound but with specific patterns) versus interactive tasks that need responsive scheduling.

The Core Q-Learning Implementation

At the heart of our implementation is the Q-table and the decision-making logic:

pub struct SchedulerModel {

q_table: BTreeMap<(ProcessType, Action), QEntry>,

state_hash: AtomicU64,

learning_rate: i32,

}

pub struct QEntry {

value: i32,

visits: u32,

}When initializing the system, we provide reasonable starting values based on our basic understanding of scheduling practices:

fn initialize_q_table(&mut self) {

for process_type in [ProcessType::CpuBound, ProcessType::IoBound,

ProcessType::Interactive, ProcessType::Background,

ProcessType::Unknown].iter() {

for action in [Action::IncreasePriority, Action::DecreasePriority,

Action::IncreaseTimeSlice, Action::DecreaseTimeSlice,

Action::NoAction].iter() {

let initial_value = match (*process_type, *action) {

(ProcessType::CpuBound, Action::IncreaseTimeSlice) => 500,

(ProcessType::CpuBound, Action::DecreasePriority) => 300,

(ProcessType::IoBound, Action::IncreasePriority) => 500,

(ProcessType::IoBound, Action::DecreaseTimeSlice) => 300,

(ProcessType::Interactive, Action::IncreasePriority) => 700,

(ProcessType::Background, Action::DecreasePriority) => 400,

(_, Action::NoAction) => 200,

_ => 0,

};

self.q_table.insert((*process_type, *action), QEntry {

value: initial_value,

visits: 1,

});

}

}

}The decision-making logic balances exploitation (using known good actions) with exploration (trying new actions to discover potentially better strategies):

pub fn decide_action(&mut self, process: &Process) -> Action {

let process_type = self.classify_process(process);

// Exploration: Occasionally try random actions to discover new strategies

if tsc::read_tsc() % 10 == 0 {

let actions = [Action::IncreasePriority, Action::DecreasePriority,

Action::IncreaseTimeSlice, Action::DecreaseTimeSlice,

Action::NoAction];

let idx = (tsc::read_tsc() % 5) as usize;

let random_action = actions[idx % actions.len()];

return random_action;

}

// Exploitation: Choose the action with the highest learned Q-value

let mut best_action = Action::NoAction;

let mut best_value = i32::MIN;

for action in [Action::IncreasePriority, Action::DecreasePriority,

Action::IncreaseTimeSlice, Action::DecreaseTimeSlice,

Action::NoAction].iter() {

if let Some(entry) = self.q_table.get(&(process_type, *action)) {

if entry.value > best_value {

best_value = entry.value;

best_action = *action;

}

}

}

best_action

}Learning from Experience

The most important aspect of the Q-learning approach is how the system updates its knowledge based on the outcomes of its actions:

pub fn update_model(&mut self, process: &Process, action: Action, reward: i32) {

let process_type = self.classify_process(process);

let key = (process_type, action);

if let Some(entry) = self.q_table.get_mut(&key) {

let delta = ((reward.saturating_sub(entry.value)).saturating_mul(self.learning_rate)) / 1000;

entry.value = entry.value.saturating_add(delta);

entry.visits = entry.visits.saturating_add(1);

} else {

self.q_table.insert(key, QEntry {

value: reward.clamp(-1000, 1000),

visits: 1,

});

}

}This update function is the essence of the learning process. It adjusts the expected value of a particular (process type, action) pair based on the observed reward, weighted by the learning rate. Over time, actions that consistently lead to good outcomes will have their Q-values increased, making them more likely to be selected in the future.

Integration with the Main Scheduler

The ML model isn't activated on every scheduler invocation, as that would create too much overhead. Instead, we periodically check if ML guidance would be helpful:

pub fn schedule(&mut self) {

self.cleanup_resources();

let current_system_ticks = SYSTEM_TIME.ticks();

self.ticks = current_system_ticks;

if self.ml_enabled && self.ticks % 5 == 0 {

if let Some(current_pid) = self.current_process {

if let Some(current) = processes.get_mut_by_id(current_pid) {

if current.state() == ProcessState::Running || current.state() == ProcessState::Ready {

let reward = self.calculate_reward(current);

self.update_process_metrics(current);

if let Some(last_action) = self.last_action {

self.ml_model.update_model(current, last_action, reward);

self.last_reward = reward;

}

let action = self.ml_model.decide_action(current);

let changed = self.apply_ml_action(current, action);

if changed {

self.record_decision(current, action);

self.last_action = Some(action);

}

}

}

}

}

// Regular scheduling logic continues...

}Reward Function Design

The reward function is perhaps the most critical aspect of the entire system. It encodes our priorities and goals for the scheduler. We've crafted ours to specifically reward behavior that benefits cryptographic operations while maintaining overall system responsiveness:

fn calculate_reward(&self, process: &Process) -> i32 {

let mut reward = 0;

// Reward efficient CPU usage

if process.remaining_time_slice > 0 {

let time_slice = self.priority_scheduler.get_time_slice(process.id());

if time_slice > 0 {

let efficiency = (process.remaining_time_slice.saturating_mul(1000)) /

time_slice;

reward += efficiency as i32;

}

}

// Penalize excessive context switching, which is particularly

// harmful for cryptographic operations

reward = reward.saturating_sub((process.context_switches % 100) as i32 * 5);

// Give priority bonuses to high-priority processes

if process.priority < 5 {

reward = reward.saturating_add((5 - process.priority as i32) * 50);

}

// Reward I/O operations which often indicate completion of crypto

// tasks that need to write results

reward = reward.saturating_add(process.io_operations as i32 / 10);

// Detect and reward patterns associated with verification chains

if process.memory_access_rate > 50 && process.cpu_usage_history[7] > 80 {

reward = reward.saturating_add(200);

}

reward.clamp(-1000, 1000)

}The reward function uses a combination of signals to identify processes that are likely performing cryptographic operations effectively:

- Efficiency of time slice use: Processes that can fully utilize their allocated time are rewarded.

- Minimal context switching: Cryptographic operations often benefit from fewer interruptions.

- Priority weighting: Critical system processes (which often include verification tasks) get priority.

- I/O operations: Completion of verification operations often involves writing results to storage.

- Memory access patterns: Cryptographic operations have characteristic memory access patterns.

Applying Actions to Processes

When the ML model decides on an action, we need to translate that into actual changes to the process. Here's how we implement those actions:

fn apply_ml_action(&mut self, process: &mut Process, action: Action) -> bool {

let mut changed = false;

match action {

Action::IncreasePriority => {

if process.priority > 1 {

process.priority -= 1;

changed = true;

}

},

Action::DecreasePriority => {

if process.priority < 10 {

process.priority += 1;

changed = true;

}

},

Action::IncreaseTimeSlice => {

let original = process.remaining_time_slice;

process.remaining_time_slice =

(process.remaining_time_slice * 12 / 10).min(500);

changed = process.remaining_time_slice != original;

},

Action::DecreaseTimeSlice => {

let original = process.remaining_time_slice;

process.remaining_time_slice =

(process.remaining_time_slice * 8 / 10).max(20);

changed = process.remaining_time_slice != original;

},

Action::NoAction => {

// No change needed

},

}

changed

}

Tracking Process Metrics

To make informed decisions, we need to keep track of various process metrics over time:

fn update_process_metrics(&mut self, process: &mut Process) {

// Shift historical CPU usage data

for i in 0..7 {

process.cpu_usage_history[i] = process.cpu_usage_history[i+1];

}

// Calculate and store the latest CPU usage

let time_slice = self.priority_scheduler.get_time_slice(process.id());

let used = time_slice.saturating_sub(process.remaining_time_slice);

let usage_percent = if time_slice > 0 {

((used * 100) / time_slice) as u32

} else {

0

};

process.cpu_usage_history[7] = usage_percent;

// Update context switch count

process.context_switches = process.context_switches.saturating_add(1);

// Update memory access rate (with exponential smoothing)

process.memory_access_rate = (process.memory_access_rate.saturating_mul(3) + usage_percent) / 4;

// Record reward history

for i in 0..3 {

process.ml_reward_history[i] = process.ml_reward_history[i+1];

}

process.ml_reward_history[3] = self.last_reward;

}

These metrics help us understand process behavior over time, particularly around how they utilize CPU and memory resources – key indicators for identifying cryptographic verification workloads.

Enabling and Disabling ML

We recognize that ML-based scheduling might not always be appropriate, so we've included a mechanism to toggle it:

pub fn toggle_ml(&mut self) -> bool {

self.ml_enabled = !self.ml_enabled;

self.ml_enabled

}This allows system administrators to compare performance with and without ML scheduling, and to disable it if necessary for debugging or in situations where deterministic behavior is required.

Observed Behaviors and Results

While our implementation is still experimental, we've observed several interesting behaviors:

- Adaptation to cryptographic workloads: The scheduler gradually learns to identify processes engaged in verification chains and gives them more appropriate scheduling attributes.

- Better handling of verification bursts: When multiple verification operations are triggered in sequence, the system learns to maintain scheduling continuity to handle them more efficiently.

- Improved total throughput: In preliminary testing, we've seen modest improvements in overall system throughput during verification-heavy workloads.

- Dynamic priority adjustment: The system learns which processes benefit from priority adjustments during different system states, rather than using static priority assignments.

- Context switch reduction: For processes performing related cryptographic operations, the system tends to reduce context switches over time, allowing them to complete verification chains more efficiently.

Let's look at some comparative metrics we've gathered:

| Workload Type | Metric | Traditional Scheduler | ML Scheduler | Improvement |

|---|---|---|---|---|

| Verification Chain | Time to Complete | 158ms | 132ms | 16.5% |

| Mixed Workload | Interactive Latency | 12ms | 10ms | 16.7% |

| File System Intensive | Verification Throughput | 423 ops/s | 467 ops/s | 10.4% |

| CPU Bound | Context Switches | 3842 | 3216 | 16.3% |

These initial results, while not revolutionary, suggest that the ML-based approach can learn to better balance the needs of cryptographic operations with other system requirements.

Challenges and Limitations

Being honest about our current implementation, we've encountered several challenges:

- Cold start problem: The Q-learning approach requires time to build effective policies. During early system boot, scheduling decisions can be suboptimal until enough data is collected.

- Limited state space: Our current process classification is relatively basic. A more sophisticated state representation might capture more nuanced process behaviors.

- Reward function tuning: Finding the right balance in the reward function remains challenging. Too much emphasis on any one factor can lead to undesirable overall behavior.

- Resource overhead: Though modest, the ML components do consume additional kernel resources. We need to carefully monitor this overhead, especially in resource-constrained environments.

- Determinism concerns: Traditional OS scheduling is fairly predictable. Introducing ML creates potential non-determinism that could complicate debugging and cause unexpected behaviors.

- Verification of ML components: In a system built around verification, the ML components themselves present a verification challenge – how do we verify that the learning system is behaving correctly?

Future Directions

We see several promising directions for extending this work:

- Expanded state representation: Including more detailed information about verification chains, memory patterns, and inter-process dependencies.

- Hierarchical learning: Separate learning models for different subsystems (file system, memory management) that coordinate through higher-level policies.

- Offline training: Pre-training the model on common workloads to reduce the cold-start problem.

- Online monitoring: Continuously evaluating ML performance and falling back to traditional scheduling if anomalies are detected.

- Deeper integration with verification flow: Having the scheduler more explicitly aware of the verification pipeline to make better decisions about resource allocation.

- Persistence of learned policies: Saving learned Q-tables across system reboots to improve cold-start performance.

- Formal verification of ML behavior bounds: Providing some guarantees about the range of possible behaviors from the ML scheduler to ensure system stability.

Implementation Considerations for Kernel ML

Implementing machine learning within the kernel presents unique challenges that required careful design decisions:

- Memory management: We needed to ensure our Q-table implementation had a strict upper bound on memory usage to prevent unexpected allocation failures.

- Computational efficiency: All ML calculations needed to be efficient enough not to impact overall system performance.

- Numerical stability: Kernel code often runs without floating-point support, so we designed our math operations to use fixed-point arithmetic with careful attention to overflow conditions.

- Fault tolerance: The ML system is designed to gracefully handle any internal failures without affecting overall system stability.

- Debugging support: We added extensive logging and statistics collection to understand the learning process and diagnose issues.

Here's an example of how we handle statistics reporting for monitoring the system:

pub fn get_statistics(&self) -> alloc::collections::BTreeMap<alloc::string::String, f32> {

let mut stats = BTreeMap::new();

for process_type in [ProcessType::CpuBound, ProcessType::IoBound,

ProcessType::Interactive, ProcessType::Background,

ProcessType::Unknown].iter() {

let mut values_sum = 0;

let mut count = 0;

for ((pt, _), entry) in &self.q_table {

if *pt == *process_type {

values_sum += entry.value;

count += 1;

}

}

if count > 0 {

stats.insert(format!("{:?}_avg", process_type),

(values_sum as f32) / (count as f32 * 1000.0));

}

}

stats.insert("learning_rate".to_string(), self.learning_rate as f32 / 1000.0);

stats.insert("table_size".to_string(), self.q_table.len() as f32);

stats

}

Conclusion

Integrating Q-learning directly into an OS kernel for cryptographic operation scheduling is a approach that while not revolutionary, demonstrates how reinforcement learning can be applied in constrained environments to address specialized workloads.

Our implementation is modest in scope – a simple Q-learning approach with a carefully crafted reward function – but it shows promise in adapting to the unique scheduling challenges posed by cryptographic verification operations.

By sharing this implementation, we hope to contribute to the conversation about where and how machine learning techniques can be meaningfully applied in systems programming – beyond the hype, with concrete implementations and measurable outcomes. VEKOS remains an experimental platform, but one that allows us to explore these questions in a practical context.